Newcomb’s Problem: Prediction vs. Free Will

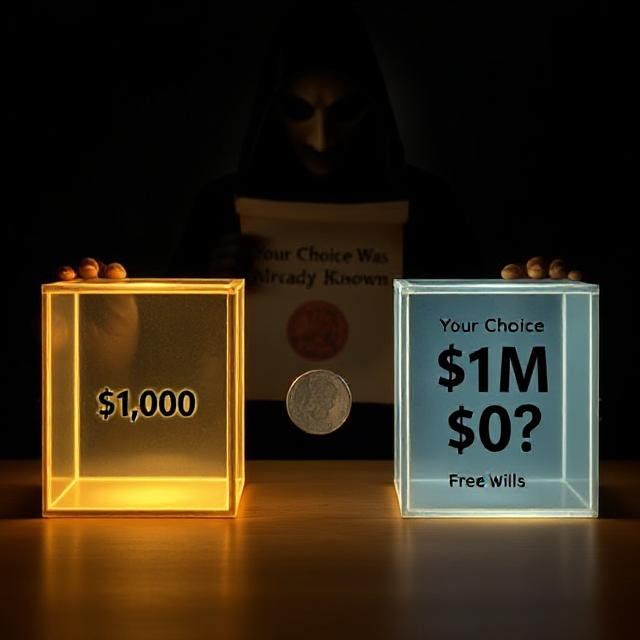

Imagine this: a mysterious predictor—perfectly accurate in all previous cases—offers you a strange choice. Before you are two boxes:

- Box A contains $1,000.

- Box B contains either $1,000,000 or nothing.

You may take only Box B, or both Box A and Box B.

The catch? The predictor has already made its prediction and set the contents of Box B accordingly. If it predicted you would take only Box B, then Box B contains $1,000,000. But if it predicted you would take both boxes, Box B contains nothing.

What do you choose? This is Newcomb’s Problem, a famous philosophical paradox that sits at the crossroads of free will, prediction, rationality, and decision theory.

I. Framing the Paradox

Newcomb’s Problem originated with physicist William Newcomb and was popularized by philosopher Robert Nozick. The key dilemma revolves around whether your current decision can affect the past prediction. It seems irrational to let a prediction already made determine your present choice—yet if the predictor is accurate, then your decision is, in some way, already accounted for.

There are two camps of thinkers:

- Two-boxers argue: Take both boxes! Why leave $1,000 on the table if the prediction is already fixed?

- One-boxers argue: Take only Box B! The predictor has never been wrong—choosing one box maximizes your expected gain.

Which is the truly rational move?

II. Determinism, Free Will, and the Predictor

At the heart of Newcomb’s Problem lies the tension between free will and determinism. If the predictor’s accuracy is absolute, it seems to imply a deterministic universe: your choice was inevitable, and the predictor just read the script. But if you believe in free will, it feels wrong to let a past prediction constrain your present choice.

A. The Role of Prediction

- If the predictor is truly perfect, then your sense of free will might be an illusion.

- But if free will is genuine, shouldn’t the prediction sometimes fail?

This raises an ontological puzzle: Does accurate prediction negate agency?

III. Decision Theory Perspectives

A. Causal Decision Theory (CDT)

According to CDT, you should two-box. Why? Because the contents of the boxes have already been determined. Your present action cannot influence a past event.

- Whatever Box B already contains, taking both boxes yields $1,000 more: at least $1,000, and possibly $1,001,000.

- Taking only Box B risks walking away with nothing if Box B is empty.
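
The dominance reasoning behind two-boxing can be sketched as a small payoff table. This is a minimal illustration rather than a full formalization of CDT; the names `payoff`, `two_boxing_dominates`, `BOX_A`, and `BOX_B_FULL` are hypothetical, and the dollar amounts are taken from the setup above.

```python
# Dollar amounts from the setup above; all names here are illustrative assumptions.
BOX_A = 1_000
BOX_B_FULL = 1_000_000


def payoff(action: str, box_b_full: bool) -> int:
    """Return the winnings for an action, given the already fixed state of Box B."""
    box_b = BOX_B_FULL if box_b_full else 0
    if action == "one-box":
        return box_b
    if action == "two-box":
        return BOX_A + box_b
    raise ValueError(f"unknown action: {action}")


def two_boxing_dominates() -> bool:
    """Check that two-boxing pays strictly more in every fixed state of Box B."""
    return all(
        payoff("two-box", state) > payoff("one-box", state)
        for state in (True, False)
    )


for state in (True, False):
    gain = payoff("two-box", state) - payoff("one-box", state)
    print(f"Box B full = {state}: two-boxing pays {gain} more")
```

Holding the state of Box B fixed, two-boxing comes out exactly $1,000 ahead in both rows. That is the whole of the CDT argument: the table treats the state as independent of the action.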

B. Evidential Decision Theory (EDT)

EDT, in contrast, argues you should one-box. Why?

- Your choice is strong evidence of what the predictor predicted.

- If you choose only Box B, it’s likely the predictor saw that coming and left $1,000,000 for you.
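
The evidential argument can be made concrete with an expected-value calculation. The sketch below assumes the predictor is right with some probability `accuracy` (a parameter introduced here for illustration; the setup above describes a predictor that has never been wrong), and the function names are hypothetical.

```python
# Dollar amounts from the setup above; names and the accuracy parameter are assumptions.
BOX_A = 1_000
BOX_B_FULL = 1_000_000


def expected_value(action: str, accuracy: float) -> float:
    """Expected winnings when the choice is treated as evidence about the prediction.

    `accuracy` is the assumed probability that the predictor foresaw the action.
    """
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    if action == "one-box":
        # Box B is full exactly when the predictor was right.
        return accuracy * BOX_B_FULL
    if action == "two-box":
        # Box B is full only when the predictor was wrong.
        return BOX_A + (1.0 - accuracy) * BOX_B_FULL
    raise ValueError(f"unknown action: {action}")


def break_even_accuracy() -> float:
    """Accuracy at which both actions have the same expected value."""
    # Solve p * M = A + (1 - p) * M for p, giving p = (M + A) / (2 * M).
    return (BOX_B_FULL + BOX_A) / (2 * BOX_B_FULL)


for p in (0.5, 0.9, 1.0):
    print(p, expected_value("one-box", p), expected_value("two-box", p))
```

Under these assumptions, one-boxing has the higher expected value whenever the predictor is right slightly more than half the time (above roughly 50.05%), so the predictor does not need to be perfect for the EDT argument to go through.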

C. Functional Decision Theory (FDT)

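Developed more recently by Eliezer Yudkowsky, Nate Soares, and others in rationalist circles, FDT suggests:

- Treat your choice as the output of a decision procedure that the predictor has also modeled, and pick the output you’d want every copy of that procedure to produce in similar situations.

- Because the prediction and your actual choice come from the same procedure, one-boxing consistently leads to better outcomes.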
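
One way to picture the "copies" idea is a toy simulation in which the predictor fills Box B by running the same decision procedure the agent later runs. This is a simplified sketch under strong assumptions (a deterministic policy and a predictor with full access to it), not a formal statement of FDT; `Policy`, `play`, `one_boxer`, and `two_boxer` are hypothetical names.

```python
from typing import Callable

# Dollar amounts from the setup above; all names here are illustrative assumptions.
BOX_A = 1_000
BOX_B_FULL = 1_000_000

# A policy is a deterministic decision procedure returning "one-box" or "two-box".
Policy = Callable[[], str]


def one_boxer() -> str:
    return "one-box"


def two_boxer() -> str:
    return "two-box"


def play(policy: Policy) -> int:
    """Play one round against a predictor that simulates the agent's own policy."""
    # Step 1: the predictor runs a copy of the policy and fills Box B accordingly.
    prediction = policy()
    box_b = BOX_B_FULL if prediction == "one-box" else 0
    # Step 2: later, the agent acts by running that same policy.
    action = policy()
    return box_b if action == "one-box" else BOX_A + box_b


print("one-boxer wins:", play(one_boxer))
print("two-boxer wins:", play(two_boxer))
```

In this toy model the one-boxing policy walks away with $1,000,000 and the two-boxing policy with $1,000, because the policy is evaluated twice and cannot return different answers to the predictor and to the agent.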

IV. Real-Life Applications

Newcomb’s Problem may seem like science fiction, but its logic applies to many real-world scenarios:

A. Trust in Algorithms

- Should you follow a GPS that’s always been right in the past?

- Should financial traders trust predictive AIs?

B. Social Prediction and Conformity

- If society predicts your behavior, are you truly free?

- How does reputation impact what others expect of you?

C. Criminal Justice

- Predictive policing uses algorithms to forecast crimes.

- Should a forecast of what someone might do influence their sentence before they have done it?

These questions echo the structure of Newcomb’s Problem.

V. The Meta-Problem: Can You Outsmart Prediction?

Let’s say you believe in radical freedom—you resolve to flip a coin or make a random move to prove you’re not predictable. But what if the predictor knows even this strategy? What if it models your meta-reasoning—your tendencies, strategies, and personality quirks?

The paradox tightens: if perfect prediction is possible, then there may be no room left for true spontaneity.

VI. Philosophical Echoes and Thought Experiments

Newcomb’s Problem is one of many mind-bending paradoxes that tug at the same thread:

- Laplace’s Demon: If a mind knew all particles’ positions and velocities, it could predict the future.

- The Prisoner’s Dilemma: Should you cooperate or defect when your partner faces the same choice and may reason just as you do?

- The Time Travel Paradoxes: If your future self already acted, what agency does your present self have?

All these point to an uneasy truth: our decisions might not be as free—or as rational—as we believe.

VII. What Should You Do?

The real brilliance of Newcomb’s Problem is that it defies a definitive solution. Both answers seem rational, yet they rest on conflicting worldviews:

- If you believe the contents of the boxes are causally independent of what you do now, then two-boxing is best.

- If you believe your choice reveals who you are (and the predictor sees this), then one-boxing wins.

So, what does your decision say about you?

VIII. Final Thoughts: Freedom, Logic, and Belief

Newcomb’s Problem forces a reevaluation of some of our deepest assumptions:

- Is rationality purely about maximizing gain?

- Can belief in free will survive in a world of perfect prediction?

- What does it mean to “decide” if a prediction already knows the answer?

Whether you’re a one-boxer or a two-boxer, this paradox forces you to confront the limits of autonomy, causality, and logic. It is more than a thought experiment—it is a window into how we model ourselves as agents in an increasingly predictive world.

In the end, Newcomb’s Problem asks not just what you’ll choose, but what kind of chooser you are.